4,000 Stars and Counting: A Trip Down Memory Lane

A trip down memory lane through the history of Docker, Compose, and monitoring with Docker and Prometheus, and the amazing community I discovered along the way.

In 2014, I was lucky enough to be one of the first people to embark on the Docker journey and try to containerize a monitoring stack. I became aware of Docker in early 2014 and spent months figuring out how it worked, sitting in engineers' DMs fixing bugs and asking tons of questions.

At the time, there were only a handful of people in the chat, and the community was so small that we all knew each other.

As a bit of a monitoring and data geek, I was tasked in 2014 with building a more adaptable monitoring solution for our new cloud venture. Initially, I tried to rebuild existing monitoring tools inside Docker containers: Nagios (before it was container-friendly), Centreon, Piwik analytics, and many others, none of which solved my problem. I also tried InfluxDB with Grafana but quickly realized it didn't fit our use case.

After much trial and error with different tools, I came across a post on the SoundCloud blog describing how they had built a time-series monitoring tool, written in Go, using a microservice architecture.

At the time, this seemed so advanced and far-fetched that it barely felt real. If I'm not mistaken, this is the blog post that introduced Prometheus to the public: https://developers.soundcloud.com/blog/prometheus-monitoring-at-soundcloud

The first step was containerizing everything.

Believe it or not, 2014 was still the pre-orchestrator era. Most people were stringing together a bunch of individual docker run commands in bash scripts and trying to "orchestrate" containers with bash.

Luckily, around that time, a company named Orchard was busy building the first Docker container orchestrator, called Fig. Fig was an absolutely amazing tool.

You could write this weird-looking configuration language called YAML, which I had never seen before, and string all your docker run commands together into a single file, letting you define a whole set of microservices in one place.

Shortly after I started using Fig and got up to speed, Docker announced it had acquired Orchard, the company behind Fig. The Docker Compose version history also preserves a nice snapshot of the moment Fig joined Docker in October 2014.

A few months later, in February 2015, Docker renamed Fig to Docker Compose, a milestone in container history I will never forget. Afterward, I figured out how to put all my favorite monitoring tools into a single Compose file.

I started using Prometheus, Grafana, Node Exporter, and Google's cAdvisor. It took me a few weeks to figure out networking inside Compose because, again, these were the early days, and many things we take for granted today were not available yet. Getting multiple services to talk to each other, wiring up their configurations, and mounting storage was a mission, but it worked.
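To make the shape of such a stack concrete, here is a minimal sketch in Python that builds a dictionary laid out like a modern Compose file for the four services named above, plus a helper that derives Prometheus scrape targets from the service names. It assumes today's Compose behavior, where services on a shared user-defined network can reach each other by service name (early Compose setups typically relied on links instead). The image names and ports are common upstream defaults, not values from the original project, and the names `SERVICES`, `build_compose`, and `scrape_targets` are hypothetical. The volume mounts that Node Exporter and cAdvisor need in order to see host and container metrics are deliberately omitted.

```python
import json

# Hypothetical service list mirroring the tools named in the post.
# Images and ports are common upstream defaults, not the original 2015 setup.
SERVICES = {
    "prometheus": {"image": "prom/prometheus", "port": 9090},
    "grafana": {"image": "grafana/grafana", "port": 3000},
    "node-exporter": {"image": "prom/node-exporter", "port": 9100},
    "cadvisor": {"image": "gcr.io/cadvisor/cadvisor", "port": 8080},
}


def build_compose(services):
    """Return a dict shaped like a Compose file, with every service on one shared network."""
    return {
        "services": {
            name: {
                "image": spec["image"],
                # Publish each service's port on the same host port.
                "ports": [f"{spec['port']}:{spec['port']}"],
                "networks": ["monitoring"],
            }
            for name, spec in services.items()
        },
        "networks": {"monitoring": {}},
    }


def scrape_targets(services, exclude=("grafana",)):
    """List Prometheus targets as service:port, relying on Compose's service-name DNS."""
    return [
        f"{name}:{spec['port']}"
        for name, spec in services.items()
        if name not in exclude
    ]


if __name__ == "__main__":
    # Printed as JSON only to keep the sketch free of third-party dependencies.
    print(json.dumps(build_compose(SERVICES), indent=2))
    print(scrape_targets(SERVICES))
```

Dumping the same dictionary with a YAML library instead of `json` would give you something close to a docker-compose.yml; the scrape targets list is what you would place under a Prometheus `static_configs` entry.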

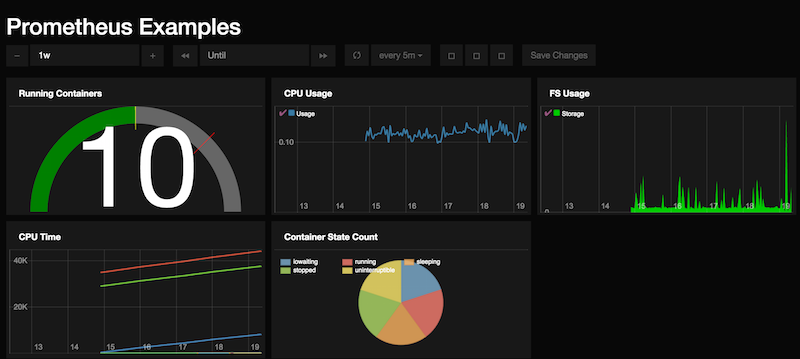

Above is the first dashboard I created with Prometheus and Grafana. It wasn't pretty, but hey, everything was running together in Docker Compose, monitoring both containers and infrastructure.

Once it was up and running, I blogged about it a few times, and the posts got a ton of attention from Docker, Google, and the entire community. Google reached out and asked me to present at their office in Zurich, Switzerland, to explain what I was building.

I don't know if I was the first person to create a complete monitoring stack using Docker and Compose, but I was the first to create a detailed manual, easy one-click deploy guides, and Docker monitoring workshops, and to hit the conference circuit, training hundreds of people to get started with Docker and monitoring. That quickly grew into becoming one of the first Docker Captains, writing books, taking part in interviews, and being an integral part of the early Docker community.

It was always important to me that the Docker Prometheus project enable others and give back to the community, in the spirit of the original article that kicked it all off, "How to setup Docker Monitoring".

Open Source matters not only for writing code but also for enabling, teaching, and mentoring others. I believe this project helped kickstart other projects and organizations that went on to build amazing things.

The next thing I knew, the Docker Prometheus GitHub project had 1k stars, then 2k, and now 4k. GitHub stars used to carry more weight; some companies even pitched their star counts to VCs. It is just a vanity metric, but I still like to check in and reflect occasionally.

It has been a fantastic journey, and it is far from over. I encourage everyone to give back to Open Source however they can, from writing code to fixing documentation. Every single contribution helps.

Thanks to Solomon Hykes, Scott Johnston, Bret Fisher, Laura Tacho, Julien Bisconti, all the Docker Captains, everyone at Docker, and the entire Docker community.

FAQ: 4,000 Stars and Counting – A Trip Down Memory Lane

1. What is the significance of reaching 4,000 stars on GitHub?

Reaching 4,000 stars on GitHub is a milestone that reflects a project's popularity and usefulness. It indicates a high level of interest and engagement from the developer community and hints at the project's impact.

2. How did you achieve 4,000 stars on your GitHub project?

Achieving 4,000 stars involved consistent updates, active community engagement, and making sure the project addressed real-world problems. Regularly adding new features, fixing bugs, and maintaining clear documentation also played a crucial role.

3. What lessons have you learned from maintaining a popular GitHub project?

Maintaining a popular GitHub project has taught me the importance of community feedback, the need for clear documentation, and the value of regular updates. It's also crucial to balance new features with stability and performance improvements.

4. How can others grow their GitHub projects to reach more stars?

To grow a GitHub project, focus on solving real problems, engaging with the community, and maintaining high-quality code. Promoting your project through blogs, social media, and collaborations with other developers can also help increase visibility and stars.

5. What tools and resources have been essential in managing your GitHub project?

Essential tools and resources include continuous integration tools, issue trackers, and automated testing frameworks. Platforms like GitHub Actions, Travis CI, and community forums have been invaluable in managing contributions and maintaining code quality.
