Run LLM Models Locally with Docker

From Docker’s new Model Runner to Google’s AI Overview shaking up search and a brewing A2A standards battle — this week was a marathon. Toss in some DIY ChatGPT action figures and you’ve got the perfect mix of chaos and creativity.

Good morning! This week has been a year long. I'm pretty confident that if I still had hair, I would've lost it again.

All the best things come from downturns. Let's focus on the positives and get to work.

Quick hitters, let's go!

Docker Model Runner

Docker has been busy lately.

Meet Docker Model Runner:

- Run AI models with the tools you’re using today

- GPU acceleration on Apple Silicon

- Pull models as OCI Artifacts

- No extra setup, just dev
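
If you want to poke at a local model from code, here is a minimal sketch in Python. It assumes Model Runner exposes an OpenAI-style chat endpoint on the host at port 12434 and that a model named `ai/smollm2` has already been pulled. Both the URL and the model name are assumptions for illustration, so check them against your own setup before relying on this.

```python
import json
import urllib.request

# Assumption: Model Runner's host-side TCP endpoint is enabled on this port.
BASE_URL = "http://localhost:12434/engines/v1"
# Assumption: a model with this name has already been pulled locally.
MODEL = "ai/smollm2"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt and return the text of the first reply."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Example (needs a running Model Runner, so it is left commented out):
# print(ask("Say hello in five words."))
```

Building the request separately from sending it keeps the sketch easy to inspect without a running model, and swapping in a different model name or base URL is a one-line change.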

A2A Beach Front Avenue

Oh wait, wrong decade. You may have heard of the recent craze for MCP (Model Context Protocol) servers, which aim to standardize how LLMs interface with applications. Well, Google just slipped in another standard called A2A (Agent2Agent), and it looks like a standards battle is brewing.

Put the coffee on, as this will be an interesting battle.

Google's new AI Overview is killing search

Killing them softly comes to mind, but for some, it is more drastic. The new Google AI Overview answers your Google search question and pushes all the other results down. This is good for Google but bad for anyone trying to rank on Google.

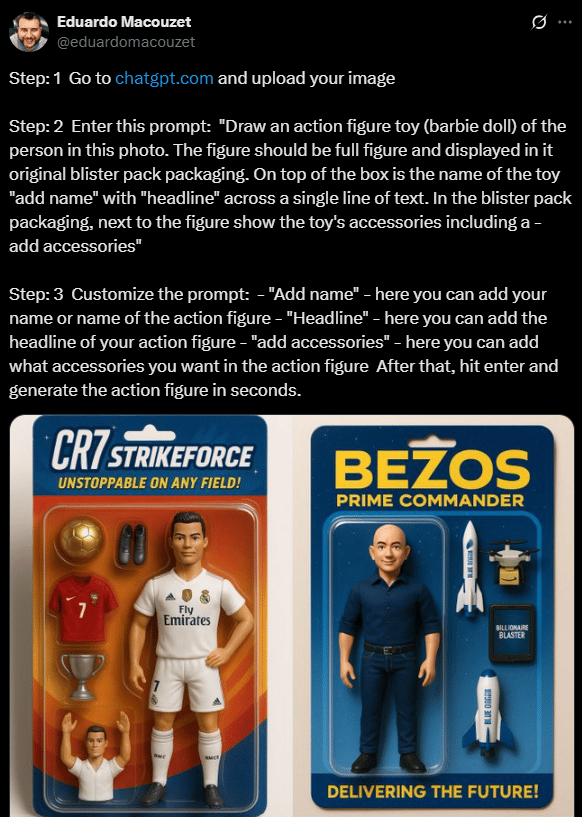

This week has been all about making ChatGPT Image action figures. So if you want to do it yourself, check out the steps below:

That's it for this week. I'm off next week and plan to deploy vacation mode for a few days!

…That’s this week’s theByte newsletter!

-Brian