Creating Cloud Agnostic Container Workloads

Learn how to create cloud-agnostic container workloads with this guide. Discover the benefits, tools, and strategies for deploying containers across multiple cloud platforms, ensuring flexibility, portability, and resilience.

The Cloud race continues down the path of least resistance. Least resistance, you may ask? Yes: every day, the three major Cloud providers, Amazon AWS, Microsoft Azure, and Google GCP, make it easier to move workloads from your data center to the Cloud.

However, without proper research and preparation, your migration could land you right back where you started: in vendor lock-in jail, and now facing massive cloud costs as well.

The Cloud Native Computing Foundation (CNCF) manages a growing list of Open Source projects, including Kubernetes, Prometheus, and containerd, to name a few.

However, the CNCF doesn't stop at being the steward of Open Source projects. It is also building a community that develops common technical standards defining Cloud Native Architecture and Applications, to protect us from vendor lock-in jail.

Cloud Native Mission Statement

OK, so how do containers play a role in Cloud Native Applications and Architecture? I'm glad you asked. Let's take a look at the Mission Statement of the CNCF to get a better understanding:

Cloud Native systems will have the following properties:

(a) Container packaged - Running applications and processes in software containers as an isolated unit of application deployment and as a mechanism to achieve high levels of resource isolation. Improves overall developer experience, fosters code and component reuse and simplify operations for Cloud Native applications.

(b) Dynamically managed - Actively scheduled and actively managed by a central orchestrating process. Radically improve machine efficiency and resource utilization while reducing the cost associated with maintenance and operations.

(c) Micro-services oriented - Loosely coupled with dependencies explicitly described (e.g. through service endpoints). Significantly increase the overall agility and maintainability of applications. The foundation will shape the evolution of the technology to advance the state of the art for application management, and to make the technology ubiquitous and easily available through reliable interfaces.

Containers are the key component of Cloud Native. Packaging applications into containers provides versatility, seamless migrations between Clouds, and, more importantly, portability. More portability permits more flexibility and moves further away from the vendor lock-in jail, which we are desperately trying to avoid.

Let's cover the next property in the CNCF mission statement, Dynamically Managed. In other words, the containers require an Orchestrator such as Docker Swarm or Kubernetes to manage, schedule, and provision them across multiple hosts. Orchestrators allow us to create an easily reproducible environment across multiple cloud providers.
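To make "easily reproducible" a little more concrete, here is a minimal sketch in Python that builds a Kubernetes Deployment manifest as a plain dictionary. The function name `build_deployment`, the app name `web`, and the image reference are hypothetical placeholders, not part of any CNCF tooling. The point is only that nothing in the manifest names a cloud provider.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 2, port: int = 8080) -> dict:
    """Return a Kubernetes apps/v1 Deployment manifest as a plain dict.

    Nothing in this manifest refers to a specific cloud provider, so the
    same document can be submitted to any conformant Kubernetes cluster.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # "web" and the image reference are hypothetical placeholders.
    manifest = build_deployment("web", "registry.example.com/web:1.0.0", replicas=3)
    print(json.dumps(manifest, indent=2))
```

Because Kubernetes accepts manifests in JSON as well as YAML, the printed output could be piped to `kubectl apply -f -` against a cluster on any provider. Only the cluster credentials would differ, not the workload definition.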

Finally, Cloud-Native Applications should be microservice-oriented. Since our applications are container-based, the next logical step is to break apart monolithic applications into microservice architectures. Microservices give us even greater flexibility and less dependence on the underlying infrastructure, thus moving us further in the direction of Cloud Native.

Why is Container Portability Important?

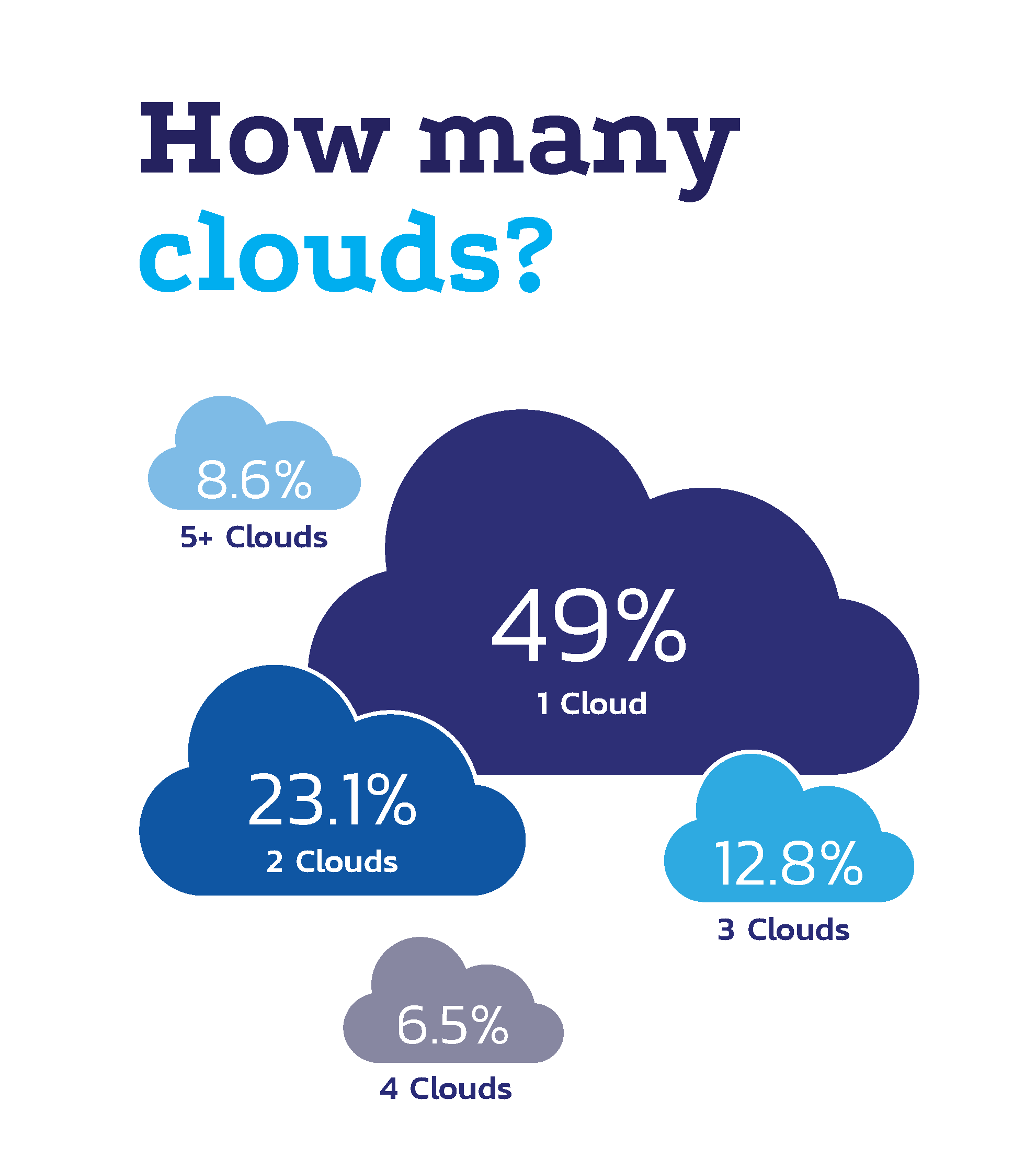

Cloudify recently released the results of a survey on the state of Enterprises using multiple clouds. Interestingly, 49% of the 600+ respondents use a single cloud, while 51% use two or more. In the recent Enterprise projects that I worked on,

Ensuring that our applications and architectures adhere to the CNCF mission statement (container packaged, dynamically managed, and microservices-oriented) gives us portability. With portable container workloads, we can quickly move our workload to other Cloud providers to take advantage of price reductions, increased capacity, new features, or disaster recovery.

Portable = Flexible

What is typical between Clouds?

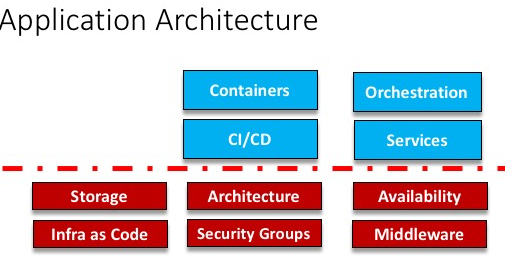

As more companies transition to Cloud, the need for the adoption of CNCF standards will increase. Since each Cloud provider uniquely exposes services, it is important to draw a line in the infrastructure indicating where we can differentiate Cloud provider-specific services versus standard services across all providers.

The graphic above displays where the divide could be between the Cloud provider-specific components and the shared cloud application components.
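One way to picture that dividing line in code is a small interface that the shared application logic depends on, with provider-specific adapters sitting behind it. The sketch below is illustrative only: `ObjectStore`, `InMemoryStore`, and `save_report` are hypothetical names, and the in-memory adapter stands in for what would, in practice, wrap a particular provider's storage SDK.

```python
from typing import Dict, Protocol


class ObjectStore(Protocol):
    """Hypothetical provider-neutral interface the application codes against."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in adapter; a real one would wrap a provider's storage SDK."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def save_report(store: ObjectStore, report_id: str, body: str) -> str:
    """Shared application logic: knows only the ObjectStore interface."""
    key = f"reports/{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key


if __name__ == "__main__":
    store = InMemoryStore()
    key = save_report(store, "2024-q1", "all systems nominal")
    print(key, store.get(key).decode("utf-8"))
```

Everything above the adapter (here, `save_report`) belongs on the shared side of the line and moves between Clouds unchanged. Only the adapter class would need a per-provider implementation.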

Conclusion

It has become overwhelmingly clear that conforming to the CNCF standards will not only increase flexibility and portability but also give us a way forward to properly adopting Multi-Cloud.

At the time of this writing, Amazon AWS and Oracle have just become members of the CNCF. This shows that the industry understands the need, and businesses and users like you and me are driving the standards.

FAQ Section: Creating Cloud-Agnostic Container Workloads

What does it mean to create cloud-agnostic container workloads?

Creating cloud-agnostic container workloads means designing and deploying containerized applications that can run seamlessly across different cloud platforms, such as AWS, Azure, or Google Cloud, without modification. This approach allows you to avoid vendor lock-in, enabling greater flexibility and portability of your workloads across multiple cloud environments.

Why is cloud agnosticism important in containerized environments?

Cloud agnosticism is important in containerized environments because it provides the freedom to move workloads between different cloud providers, optimize costs, and leverage the best features of each platform. It also enhances resilience by allowing workloads to be distributed across multiple clouds, reducing dependency on a single provider and mitigating risks associated with outages or price changes.

How can I make my container workloads cloud-agnostic?

To make your container workloads cloud-agnostic, focus on using open standards and technologies that are supported across multiple cloud platforms, such as Docker, Kubernetes, and Terraform. Avoid relying on proprietary cloud services that tie your application to a specific provider. Additionally, use multi-cloud management tools, abstract your infrastructure configuration, and implement CI/CD pipelines that can deploy to different cloud environments without requiring changes to the application code.
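As a rough illustration of deploying to different environments without changing application code, the sketch below reads all environment-specific values from environment variables at startup. The `Settings` class and the variable names `DATABASE_URL`, `QUEUE_URL`, and `LOG_LEVEL` are assumptions made for this example. The idea is that the same container image runs everywhere and each pipeline injects its own values.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    """Environment-specific values, injected at deploy time (names are hypothetical)."""

    database_url: str
    queue_url: str
    log_level: str = "INFO"

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "Settings":
        # Fail fast with a clear message if the deployment forgot a value.
        missing = [name for name in ("DATABASE_URL", "QUEUE_URL") if name not in env]
        if missing:
            raise RuntimeError(f"missing required settings: {', '.join(missing)}")
        return cls(
            database_url=env["DATABASE_URL"],
            queue_url=env["QUEUE_URL"],
            log_level=env.get("LOG_LEVEL", "INFO"),
        )


if __name__ == "__main__":
    # Example values only; a real deployment would set these per environment.
    example_env = {
        "DATABASE_URL": "postgres://db.internal/app",
        "QUEUE_URL": "amqp://mq.internal",
    }
    print(Settings.from_env(example_env))
```

Passing the mapping explicitly, as in the usage example, also makes the loader easy to test without touching the real process environment.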

What tools and technologies support cloud-agnostic container workloads?

Tools and technologies that support cloud-agnostic container workloads include Docker for containerization, Kubernetes for orchestration, Terraform for infrastructure as code, and Prometheus and Grafana for monitoring. Service networking tools like HashiCorp Consul and Kubernetes management platforms like Rancher also help manage and deploy cloud-agnostic workloads across different cloud environments.

What are the challenges of creating cloud-agnostic container workloads, and how can they be overcome?

Challenges of creating cloud-agnostic container workloads include managing differences in cloud provider services, ensuring consistent performance across platforms, and handling complex multi-cloud networking. These challenges can be overcome by using abstraction layers that hide provider-specific details, implementing thorough testing in multiple cloud environments, and using cloud-agnostic networking solutions like Calico or Cilium. Additionally, automating deployment and management tasks with tools like Kubernetes Operators can help streamline operations across different clouds.
